Direct LiDAR Odometry: Fast Localization with Dense Point Clouds

K. Chen, B.T. Lopez, A. Agha-mohammadi, and A. Mehta, “Direct LiDAR Odometry: Fast Localization with Dense Point Clouds,” IEEE Robotics and Automation Letters (RA-L), vol. 7, no. 2, pp. 2000-2007, April 2022, doi: 10.1109/LRA.2022.3142739. Presented at ICRA 2022, Philadelphia, PA.

Paper: https://ieeexplore.ieee.org/document/9681177

Code: https://github.com/vectr-ucla/direct_lidar_odometry

Video: https://www.youtube.com/watch?v=4VF-TNnv6sk

Presentation: https://www.youtube.com/watch?v=APot6QP_wvg

Abstract

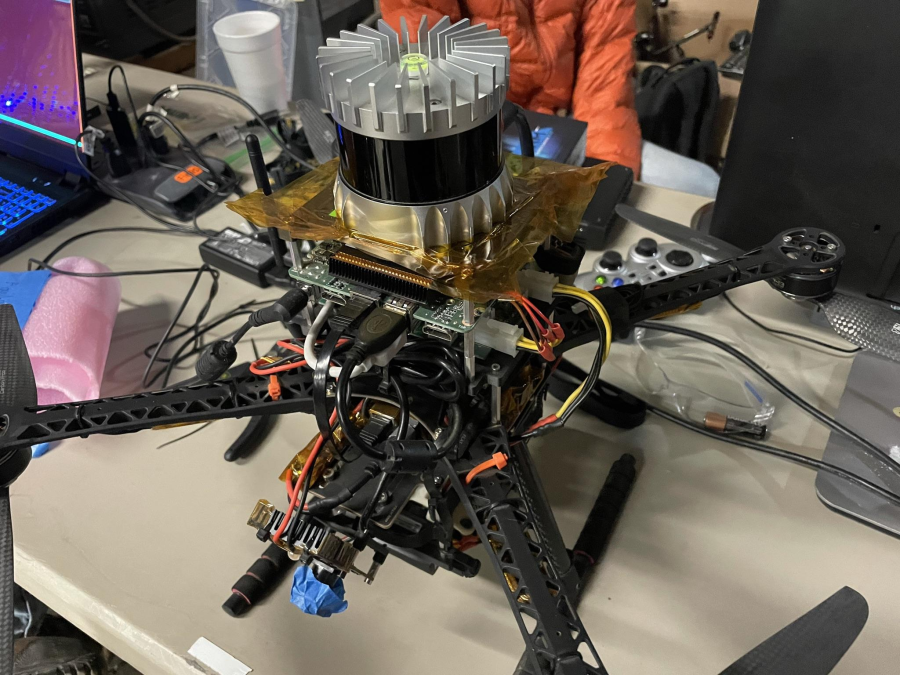

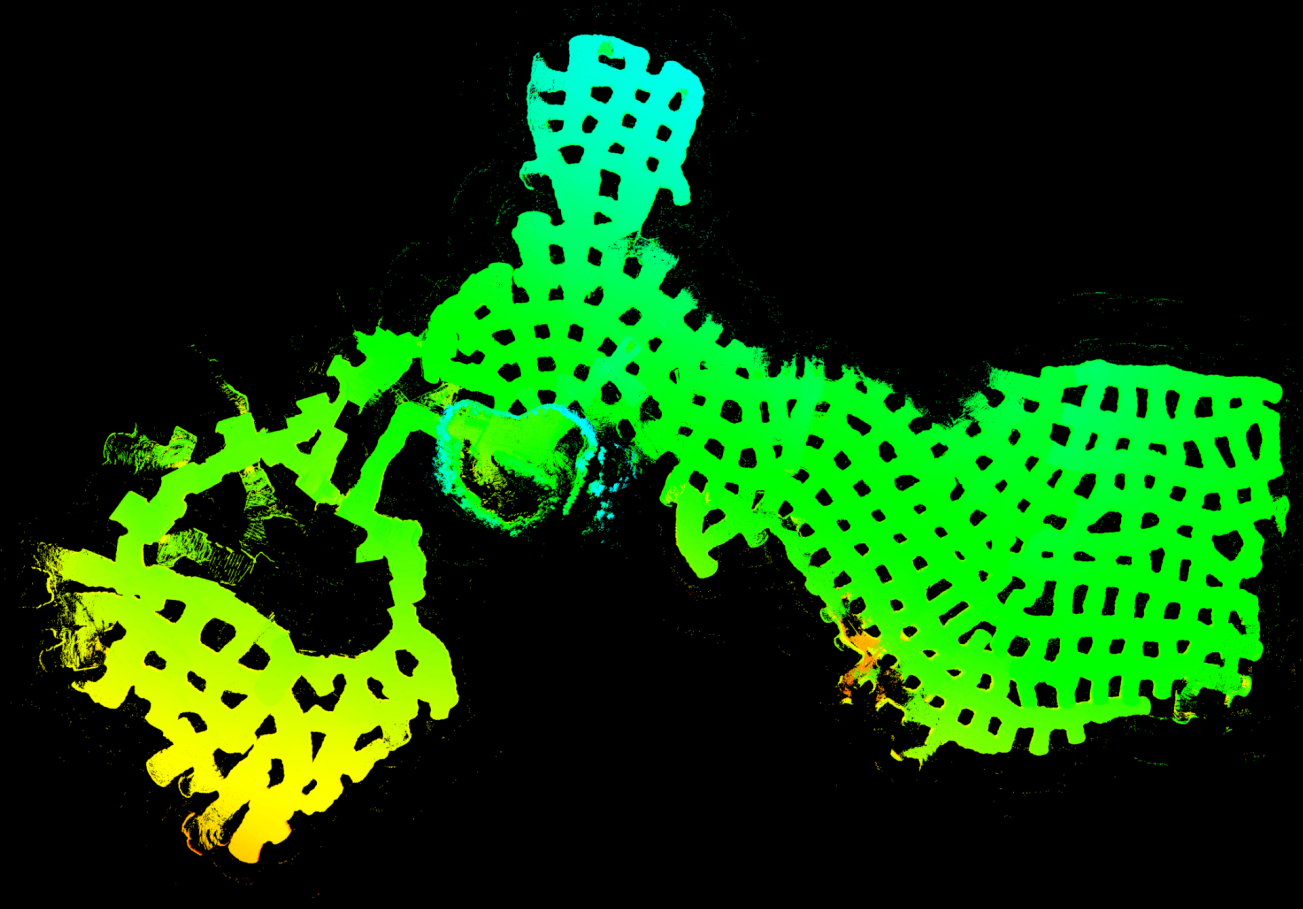

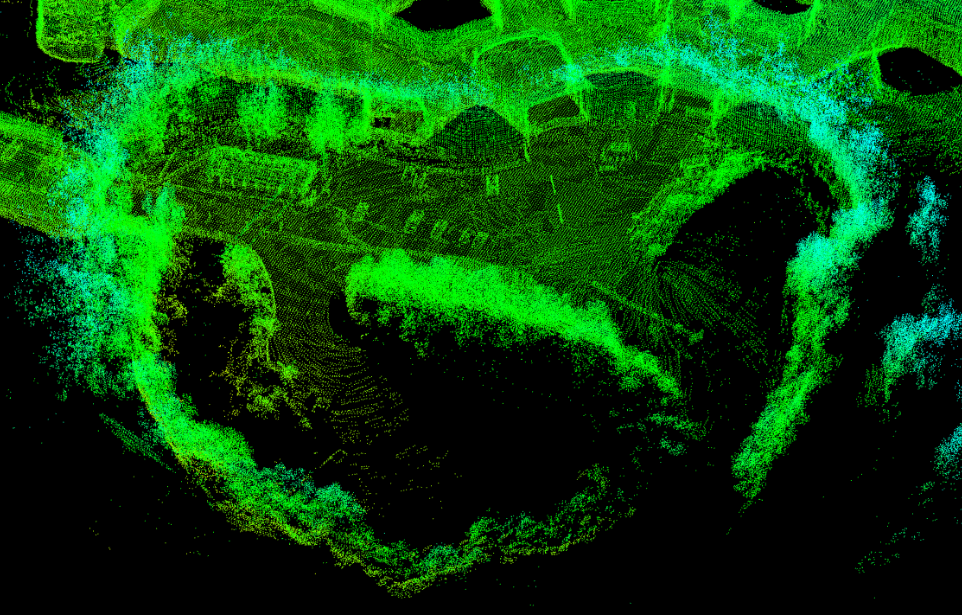

Field robotics in perceptually-challenging environments requires fast and accurate state estimation, but modern LiDAR sensors quickly overwhelm current odometry algorithms. To this end, this paper presents a lightweight frontend LiDAR odometry solution with consistent and accurate localization for computationally-limited robotic platforms. Our Direct LiDAR Odometry (DLO) method includes several key algorithmic innovations which prioritize computational efficiency and enable the use of dense, minimally-preprocessed point clouds to provide accurate pose estimates in real-time. This is achieved through a novel keyframing system which efficiently manages historical map information, in addition to a custom iterative closest point solver for fast point cloud registration with data structure recycling. Our method is more accurate with lower computational overhead than the current state-of-the-art and has been extensively evaluated in several perceptually-challenging environments on aerial and legged robots as part of NASA JPL Team CoSTAR’s research and development efforts for the DARPA Subterranean Challenge.
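
For readers unfamiliar with iterative closest point (ICP) registration, the sketch below shows the textbook point-to-point variant in Python with NumPy. It is not DLO's custom solver and does none of the data structure recycling mentioned above; the function names `best_rigid_transform` and `icp`, the brute-force nearest-neighbor search, and the default iteration count and tolerance are all assumptions chosen for illustration.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with R @ src_i + t ~= dst_i.

    Uses the SVD-based (Kabsch) solution for paired 3D points.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant of -1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(source, target, iterations=20, tol=1e-8):
    """Align `source` (N x 3) to `target` (M x 3) with point-to-point ICP.

    Returns the accumulated rotation and translation. Illustrative only:
    the nearest-neighbor search is brute force, O(N * M) per iteration.
    """
    R_total = np.eye(3)
    t_total = np.zeros(3)
    current = source.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Squared distance from every current point to every target point.
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        idx = d2.argmin(axis=1)
        err = d2[np.arange(len(current)), idx].mean()
        # Solve for the step that best aligns the matched pairs.
        R, t = best_rigid_transform(current, target[idx])
        current = current @ R.T + t
        # Compose the step with the transform accumulated so far.
        R_total = R @ R_total
        t_total = R @ t_total + t
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total
```

A practical LiDAR pipeline would replace the brute-force search with a spatial index such as a k-d tree; building that index for every scan is a significant cost in its own right, which is presumably why reusing data structures matters for speed.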