Risk-Averse MPC via Visual-Inertial Input and Recurrent Networks for Online Collision Avoidance

A. Schperberg*, K. Chen*, S. Tsuei, M. Jewett, J. Hooks, S. Soatto, A. Mehta, and D. Hong, “Risk-Averse MPC via Visual-Inertial Input and Recurrent Networks for Online Collision Avoidance,” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, Oct 2020, pp. 5730-5737, doi: 10.1109/IROS45743.2020.9341070. *Indicates equal contribution

Paper: https://ieeexplore.ieee.org/abstract/document/9341070

Video: https://www.youtube.com/watch?v=td4K55Tj-U8

Presentation: https://www.youtube.com/watch?v=ZTGFaQT7cFM

Abstract

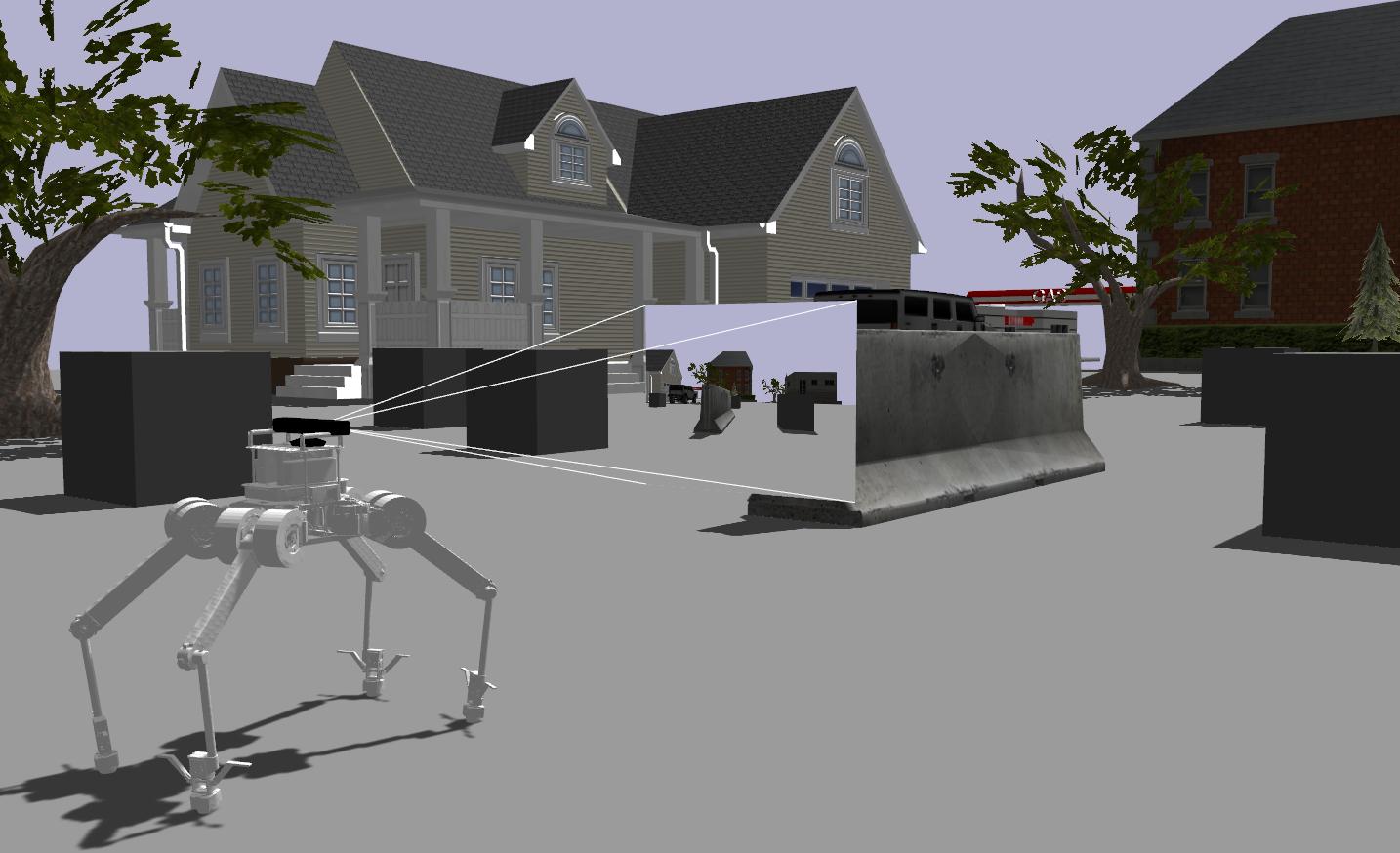

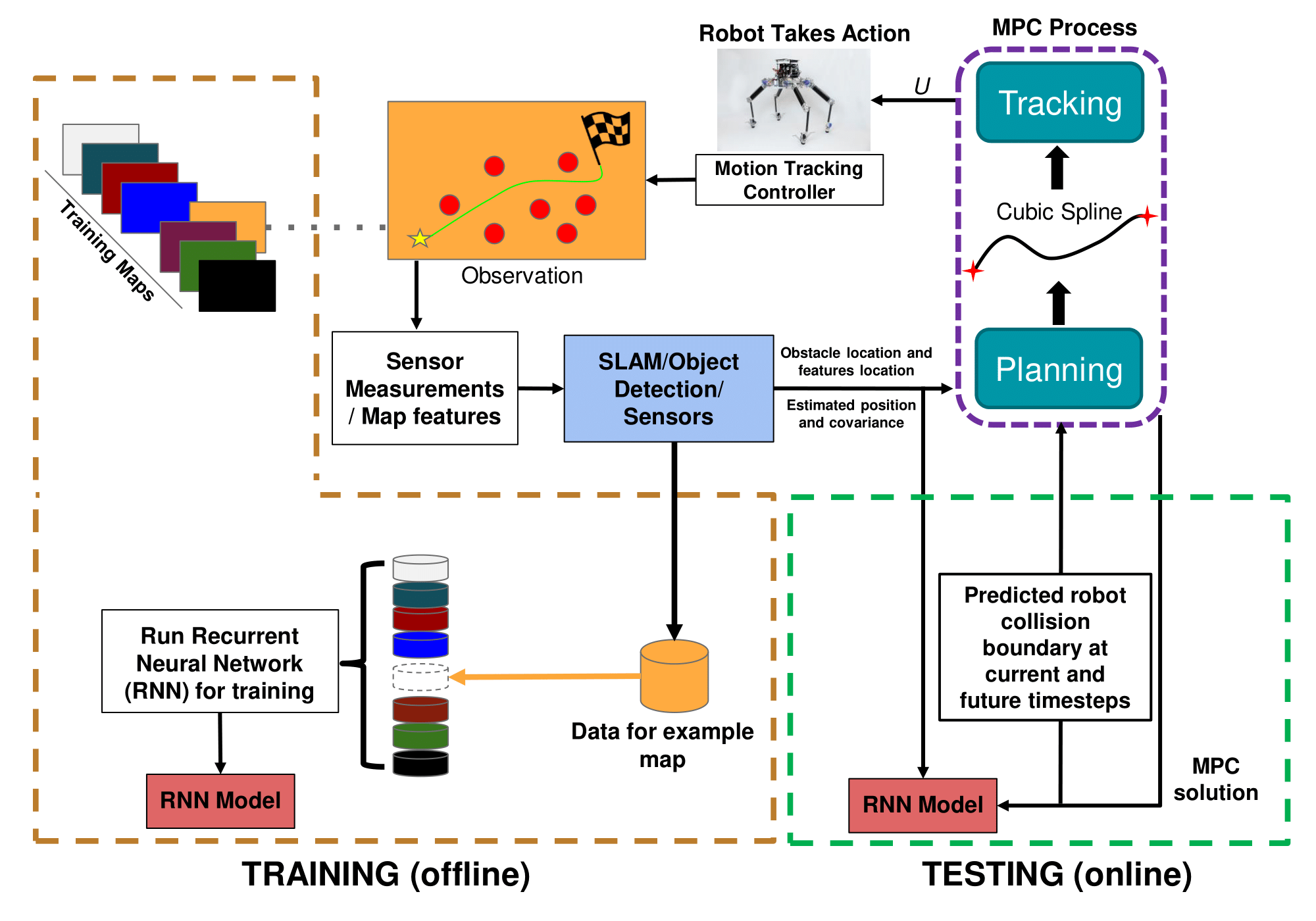

In this paper, we propose an online path planning architecture that extends the model predictive control (MPC) formulation to consider future location uncertainties for safer navigation through cluttered environments. Our algorithm combines an object detection pipeline with a recurrent neural network (RNN) that infers the covariance of state estimates at each step of the MPC’s finite time horizon. The RNN model is trained on a dataset of robot and landmark poses generated from camera images and inertial measurement unit (IMU) readings via a state-of-the-art visual-inertial odometry framework. To detect and extract object locations for avoidance, we use a custom-trained convolutional neural network model in conjunction with a feature extractor to retrieve the 3D centroids and radii of nearby obstacles. We validate the robustness of our methods on a quadruped robot with complex dynamics, which autonomously plans fast, collision-free paths toward a goal point.
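One way to picture how a per-step covariance can make a planner risk-averse is to enlarge each obstacle's radius by a margin that grows with the predicted position uncertainty. The sketch below is only an illustration under stated assumptions, not the formulation used in the paper: the function names, the scaling factor `kappa`, the use of the covariance trace as a conservative bound on the largest variance, and all numeric values are hypothetical. In the paper the covariances would come from the RNN and the centroid and radius from the detection pipeline; here they are hand-written placeholders.

```python
import math

def inflated_radius(obstacle_radius, position_cov, kappa=2.0):
    """Grow an obstacle radius by kappa times a conservative standard deviation.

    Assumption: position_cov is a 3x3 positive semi-definite covariance given as
    nested lists. Its trace is an upper bound on its largest eigenvalue, so
    sqrt(trace) over-estimates the worst-case standard deviation.
    """
    trace = sum(position_cov[i][i] for i in range(3))
    return obstacle_radius + kappa * math.sqrt(max(trace, 0.0))

def trajectory_is_safe(positions, covariances, centroid, obstacle_radius, kappa=2.0):
    """Return True if every horizon step stays outside the inflated obstacle sphere."""
    for position, cov in zip(positions, covariances):
        margin = inflated_radius(obstacle_radius, cov, kappa)
        if math.dist(position, centroid) < margin:
            return False
    return True

def isotropic_cov(variance):
    """Hypothetical helper: a 3x3 covariance with equal variance on each axis."""
    return [[variance if i == j else 0.0 for j in range(3)] for i in range(3)]

# Hypothetical 3-step horizon: uncertainty grows the further ahead we predict.
positions = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]
covariances = [isotropic_cov(v) for v in (0.01, 0.04, 0.09)]
centroid = (1.0, 1.0, 0.0)   # placeholder obstacle centroid
obstacle_radius = 0.5        # placeholder obstacle radius

# Ignoring uncertainty, the path clears the obstacle.
print(trajectory_is_safe(positions, covariances, centroid, obstacle_radius, kappa=0.0))  # True
# With a two-sigma margin, the later steps are judged too risky.
print(trajectory_is_safe(positions, covariances, centroid, obstacle_radius, kappa=2.0))  # False
```

In an MPC setting, a check like this would typically appear as a constraint or penalty inside the optimization rather than as a pass/fail test after the fact; the standalone check is used here only to keep the example short.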