Patient-Specific Pose Estimation in Clinical Environments

K. Chen, P. Gabriel, A. Alasfour, C. Gong, W.K. Doyle, O. Devinsky, D. Friedman, L. Melloni, T. Thesen, D. Gonda, S. Sattar, S. Wang, and V. Gilja, “Patient-Specific Pose Estimation in Clinical Environments,” IEEE Journal of Translational Engineering in Health and Medicine, vol. 6, pp. 1-11, 2018.

Paper: https://ieeexplore.ieee.org/document/8490852

Code: https://github.com/TNEL-UCSD/PatientPose

Video: https://www.youtube.com/watch?v=c3DZ5ojPa9k

Abstract

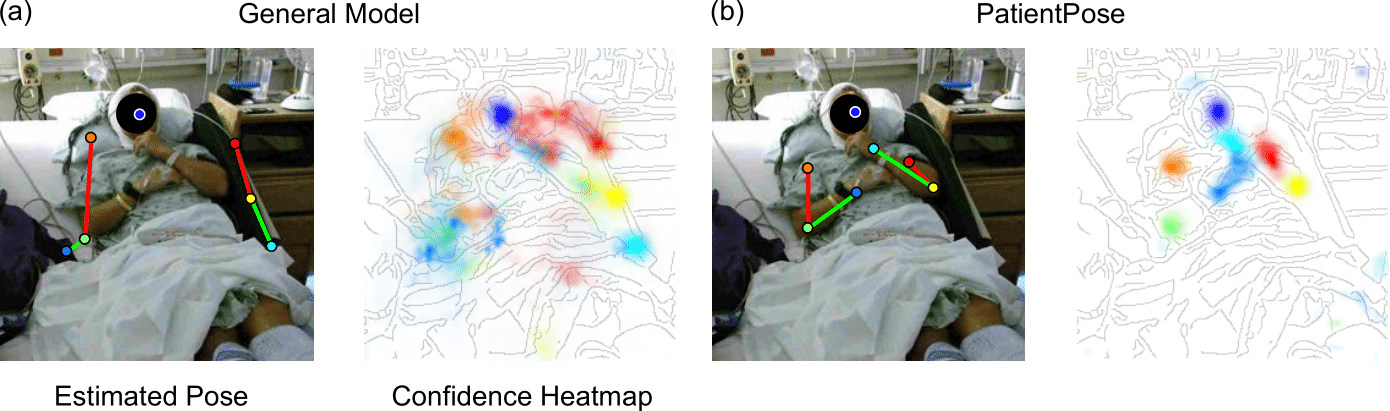

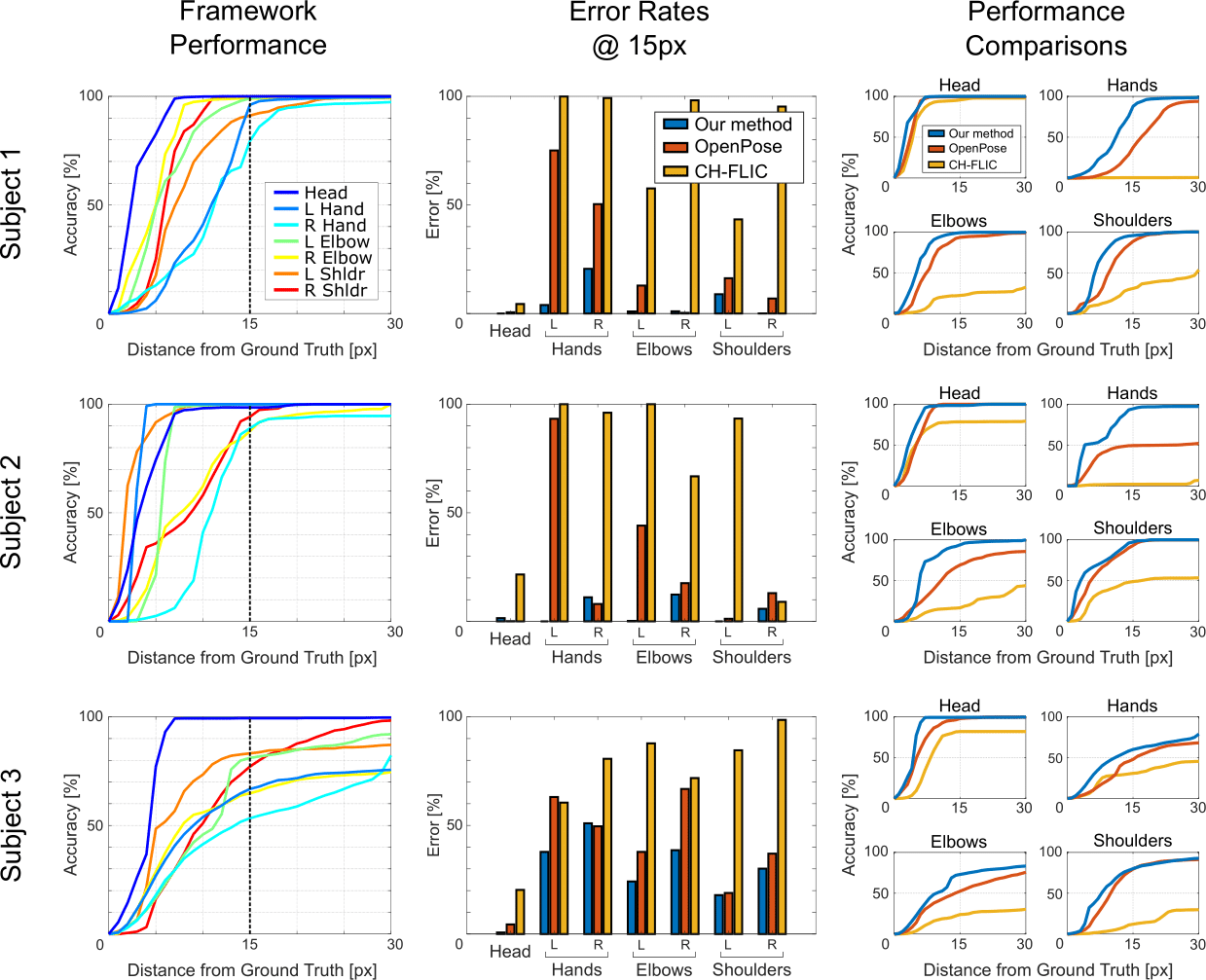

Reliable posture labels in hospital environments can support research on the neural correlates of natural behaviors, as well as clinical applications that monitor patient activity. However, many existing pose estimation frameworks are not calibrated for these unpredictable settings. In this paper we propose a semi-automated approach for improving upper-body pose estimation in noisy clinical environments, in which we adapt and build around an existing joint tracking framework to improve its robustness to environmental uncertainties. The proposed framework uses subject-specific convolutional neural network (CNN) models trained on a subset of a patient's RGB video recording, chosen to maximize the feature variance of each joint. Furthermore, by compensating for scene lighting changes and by refining the predicted joint trajectories through a Kalman filter with fitted noise parameters, the extended system yields more consistent and accurate posture annotations than two state-of-the-art generalized pose tracking algorithms for three hospital patients recorded in two research clinics.
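The trajectory-refinement step mentioned in the abstract can be illustrated with a minimal sketch. The code makes three assumptions. Each joint coordinate is filtered independently with a constant-velocity motion model. The measurement-noise variance is "fitted" as the mean squared error between CNN predictions and a few manually annotated frames. The process-noise scale `q` is a hand-tuned parameter. The helper names `fit_measurement_noise` and `kalman_smooth_1d`, the motion model, and the noise-fitting rule are all hypothetical and are not taken from the PatientPose repository.

```python
import numpy as np


def fit_measurement_noise(predicted, annotated):
    """Hypothetical helper: estimate the measurement-noise variance R as the
    mean squared error between CNN joint predictions and manual annotations."""
    predicted = np.asarray(predicted, dtype=float)
    annotated = np.asarray(annotated, dtype=float)
    return float(np.mean((predicted - annotated) ** 2))


def kalman_smooth_1d(z, q, r, dt=1.0):
    """Filter one joint coordinate with a constant-velocity Kalman filter.

    z: noisy per-frame measurements (e.g. the x pixel coordinate of a wrist)
    q: process-noise scale (assumed, hand-tuned)
    r: measurement-noise variance (e.g. from fit_measurement_noise)
    Returns filtered positions (forward pass only).
    """
    z = np.asarray(z, dtype=float)
    F = np.array([[1.0, dt], [0.0, 1.0]])  # position += velocity * dt
    H = np.array([[1.0, 0.0]])             # only position is observed
    # Discrete white-noise acceleration model for the process noise
    Q = q * np.array([[dt**4 / 4, dt**3 / 2], [dt**3 / 2, dt**2]])
    R = np.array([[r]])

    x = np.array([z[0], 0.0])  # start at the first measurement, zero velocity
    P = np.eye(2) * r          # initial state uncertainty
    out = np.empty_like(z)
    for k, zk in enumerate(z):
        # Predict the next state from the motion model
        x = F @ x
        P = F @ P @ F.T + Q
        # Correct the prediction with the new measurement
        y = zk - H @ x             # innovation, shape (1,)
        S = H @ P @ H.T + R        # innovation covariance, shape (1, 1)
        K = P @ H.T / S            # Kalman gain, shape (2, 1)
        x = x + (K * y).ravel()
        P = (np.eye(2) - K @ H) @ P
        out[k] = x[0]
    return out


# Example: filter a synthetic noisy wrist x-coordinate
rng = np.random.default_rng(0)
t = np.arange(200)
true_x = 100.0 + 2.0 * t
noisy_x = true_x + rng.normal(0.0, 5.0, size=t.size)
# Pretend the first 50 frames were manually annotated
r_fit = fit_measurement_noise(noisy_x[:50], true_x[:50])
smoothed = kalman_smooth_1d(noisy_x, q=0.01, r=r_fit)
```

Because this filter runs forward only, it could be applied online as frames arrive. An offline annotation pipeline might instead add a backward Rauch-Tung-Striebel pass, which uses future frames as well and typically yields smoother trajectories.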